Running A Script When Locking MATE

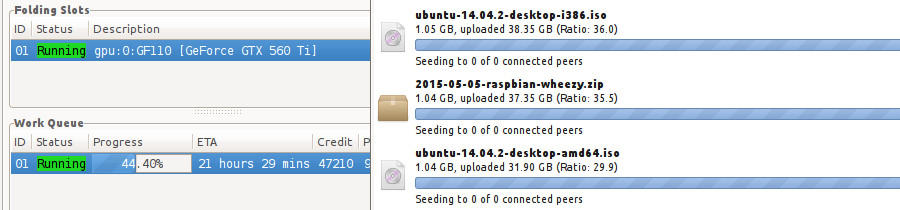

My PC is on literally 24/7, as I run a FAH (Folding@home) client and Transmission to seed the RPi and Ubuntu image torrents. When I want to use the machine myself, though, I have to pause them both because they bog it down.

This was a manual task I had to do every time I sat down, and I then had to remember to set them both going again when I was done. That is something I didn't always remember to do at 3AM after a session of "I'll just do a little more..."

Out of habit, I lock my PC whenever I leave it, so I thought that would be an ideal trigger to pause and resume the FAH client and Transmission!